Article-level metrics (ALMs) provide a wide range of metrics about the uptake of an individual journal article by the scientific community after publication. They include citations, usage statistics, discussions in online comments and social media, social bookmarking, and recommendations. In this essay, we describe why article-level metrics are an important extension of traditional citation-based journal metrics and provide a number of examples from ALM data collected for PLOS Biology.

The scientific impact of a particular piece of research is reflected in how this work is taken up by the scientific community. The first systematic approach that was used to assess impact, based on the technology available at the time, was to track citations and aggregate them by journal. This strategy is not only no longer necessary since now we can easily track citations for individual articles but also, and more importantly, journal-based metrics are now considered a poor performance measure for individual articles (Campbell, 2008; Glänzel & Wouters, 2013). One major problem with journal-based metrics is the variation in citations per article, which means that a small percentage of articles can skew, and are responsible for, the majority of the journal-based citation impact factor, as shown by Campbell (2008) for the 2004 Nature Journal Impact Factor. Figure 1 further illustrates this point, showing the wide distribution of citation counts between PLOS Biology research articles published in 2010. PLOS Biology research articles published in 2010 have been cited a median 19 times to date in Scopus, but 10% of them have been cited 50 or more times, and two articles (Dickson, Wang, Krantz, Hakonarson, & Goldstein, 2010; Narendra et al., 2010) more than 300 times. PLOS Biology metrics are used as examples throughout this essay, and the dataset is available in the supporting information (Data S1). Similar data are available for an increasing number of other publications and organizations.

# code for figure 1: density plots for citation counts for PLOS Biology

# articles published in 2010

# load May 20, 2013 ALM report

alm <- read.csv("data/alm_report_plos_biology_2013-05-20.csv", stringsAsFactors = FALSE)

# only look at research articles

alm <- subset(alm, alm$article_type == "Research Article")

# only look at papers published in 2010

alm$publication_date <- as.Date(alm$publication_date)

alm <- subset(alm, alm$publication_date > "2010-01-01" & alm$publication_date <=

"2010-12-31")

# labels

colnames <- dimnames(alm)[[2]]

plos.color <- "#1ebd21"

plos.source <- "scopus"

plos.xlab <- "Scopus Citations"

plos.ylab <- "Probability"

quantile <- quantile(alm[, plos.source], c(0.1, 0.5, 0.9), na.rm = TRUE)

# plot the chart

opar <- par(mai = c(0.5, 0.75, 0.5, 0.5), omi = c(0.25, 0.1, 0.25, 0.1), mgp = c(3,

0.5, 0.5), fg = "black", cex.main = 2, cex.lab = 1.5, col = plos.color,

col.main = plos.color, col.lab = plos.color, xaxs = "i", yaxs = "i")

d <- density(alm[, plos.source], from = 0, to = 100)

d$x <- append(d$x, 0)

d$y <- append(d$y, 0)

plot(d, type = "n", main = NA, xlab = NA, ylab = NA, xlim = c(0, 100), frame.plot = FALSE)

polygon(d, col = plos.color, border = NA)

mtext(plos.xlab, side = 1, col = plos.color, cex = 1.25, outer = TRUE, adj = 1,

at = 1)

mtext(plos.ylab, side = 2, col = plos.color, cex = 1.25, outer = TRUE, adj = 0,

at = 1, las = 1)

par(opar)

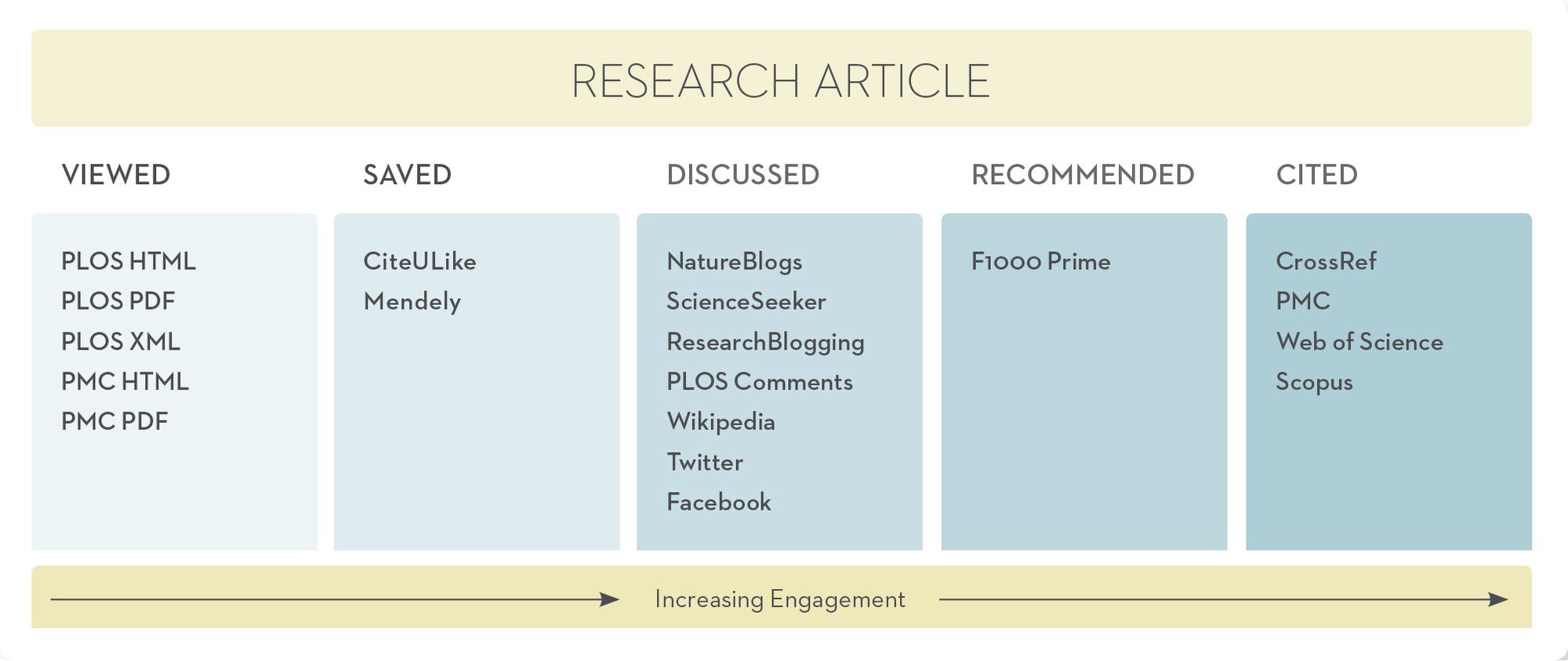

Scientific impact is a multi-dimensional construct that can not be adequately measured by any single indicator (Bollen, Sompel, Hagberg, & Chute, 2009; Glänzel & Wouters, 2013; Schekman & Patterson, 2013). To this end, PLOS has collected and displayed a variety of metrics for all its articles since 2009. The array of different categorised article-level metrics (ALMs) used and provided by PLOS as of August 2013 are shown in Figure 2. In addition to citations and usage statistics, i.e., how often an article has been viewed and downloaded, PLOS also collects metrics about: how often an article has been saved in online reference managers, such as Mendeley; how often an article has been discussed in its comments section online, and also in science blogs or in social media; and how often an article has been recommended by other scientists. These additional metrics provide valuable information that we would miss if we only consider citations. Two important shortcomings of citation-based metrics are that (1) they take years to accumulate and (2) citation analysis is not always the best indicator of impact in more practical fields, such as clinical medicine (Eck, Waltman, Raan, Klautz, & Peul, 2013). Usage statistics often better reflect the impact of work in more practical fields, and they also sometimes better highlight articles of general interest (for example, the 2006 PLOS Biology article on the citation advantage of Open Access articles (Eysenbach, 2006), one of the 10 most-viewed articles published in PLOS Biology).

A bubble chart showing all 2010 PLOS Biology articles (Figure 3) gives a good overview of the year’s views and citations, plus it shows the influence that the article type (as indicated by dot color) has on an article’s performance as measured by these metrics. The weekly PLOS Biology publication schedule is reflected in this figure, with articles published on the same day present in a vertical line. Figure 3 also shows that the two most highly cited 2010 PLOS Biology research articles are also among the most viewed (indicated by the red arrows), but overall there isn’t a strong correlation between citations and views. The most-viewed article published in 2010 in PLOS Biology is an essay on Darwinian selection in robots (Floreano & Keller, 2010). Detailed usage statistics also allow speculatulation about the different ways that readers access and make use of published literature; some articles are browsed or read online due to general interest while others that are downloaded (and perhaps also printed) may reflect the reader’s intention to look at the data and results in detail and to return to the article more than once.

# code for figure 3: Bubblechart views vs. citations for PLOS Biology

# articles published in 2010.

# Load required libraries

library(plyr)

# load May 20, 2013 ALM report

alm <- read.csv("../data/alm_report_plos_biology_2013-05-20.csv", stringsAsFactors = FALSE,

na.strings = c("0"))

# only look at papers published in 2010

alm$publication_date <- as.Date(alm$publication_date)

alm <- subset(alm, alm$publication_date > "2010-01-01" & alm$publication_date <=

"2010-12-31")

# make sure counter values are numbers

alm$counter_html <- as.numeric(alm$counter_html)

# lump all papers together that are not research articles

reassignType <- function(x) if (x == "Research Article") 1 else 0

alm$article_group <- aaply(alm$article_type, 1, reassignType)

# calculate article age in months

alm$age_in_months <- (Sys.Date() - alm$publication_date)/365.25 * 12

start_age_in_months <- floor(as.numeric(Sys.Date() - as.Date(strptime("2010-12-31",

format = "%Y-%m-%d")))/365.25 * 12)

# chart variables

x <- alm$age_in_months

y <- alm$counter

z <- alm$scopus

xlab <- "Age in Months"

ylab <- "Total Views"

labels <- alm$article_group

col.main <- "#1ebd21"

col <- "#666358"

# calculate bubble diameter

z <- sqrt(z/pi)

# calculate bubble color

getColor <- function(x) c("#c9c9c7", "#1ebd21")[x + 1]

colors <- aaply(labels, 1, getColor)

# plot the chart

opar <- par(mai = c(0.5, 0.75, 0.5, 0.5), omi = c(0.25, 0.1, 0.25, 0.1), mgp = c(3,

0.5, 0.5), fg = "black", cex = 1, cex.main = 2, cex.lab = 1.5, col = "white",

col.main = col.main, col.lab = col)

plot(x, y, type = "n", xlim = c(start_age_in_months, start_age_in_months + 13),

ylim = c(0, 60000), xlab = NA, ylab = NA, las = 1)

symbols(x, y, circles = z, inches = exp(1.3)/15, bg = colors, xlim = c(start_age_in_months,

start_age_in_months + 13), ylim = c(0, ymax), xlab = NA, ylab = NA, las = 1,

add = TRUE)

mtext(xlab, side = 1, col = col.main, cex = 1.25, outer = TRUE, adj = 1, at = 1)

mtext(ylab, side = 2, col = col.main, cex = 1.25, outer = TRUE, adj = 0, at = 1,

las = 1)

par(opar)

When readers first see an interesting article, their response is often to view or download it. By contrast, a citation may be one of the last outcomes of their interest, occuring only about 1 in 300 times a PLOS paper is viewed online. A lot of things happen in between these potential responses, ranging from discussions in comments, social media, and blogs, to bookmarking, to linking from websites. These activities are usually subsumed under the term “altmetrics,†and their variety can be overwhelming. Therefore, it helps to group them together into categories, and several organizations, including PLOS, are using the category labels of Viewed, Cited, Saved, Discussed, and Recommended (Figures 2 and 4, see also (Lin & Fenner, 2013)).

# code for figure 4: bar plot for Article-level metrics for PLOS Biology

# Load required libraries

library(reshape2)

# load May 20, 2013 ALM report

alm <- read.csv("../data/alm_report_plos_biology_2013-05-20.csv", stringsAsFactors = FALSE,

na.strings = c(0, "0"))

# only look at research articles

alm <- subset(alm, alm$article_type == "Research Article")

# make sure columns are in the right format

alm$counter_html <- as.numeric(alm$counter_html)

alm$mendeley <- as.numeric(alm$mendeley)

# options

plos.color <- "#1ebd21"

plos.colors <- c("#a17f78", "#ad9a27", "#ad9a27", "#ad9a27", "#ad9a27", "#ad9a27",

"#dcebdd", "#dcebdd", "#789aa1", "#789aa1", "#789aa1", "#304345", "#304345")

# use subset of columns

alm <- subset(alm, select = c("f1000", "wikipedia", "researchblogging", "comments",

"facebook", "twitter", "citeulike", "mendeley", "pubmed", "crossref", "scopus",

"pmc_html", "counter_html"))

# calculate percentage of values that are not missing (i.e. have a count of

# at least 1)

colSums <- colSums(!is.na(alm)) * 100/length(alm$counter_html)

exactSums <- sum(as.numeric(alm$pmc_html), na.rm = TRUE)

# plot the chart

opar <- par(mar = c(0.1, 7.25, 0.1, 0.1) + 0.1, omi = c(0.1, 0.25, 0.1, 0.1),

col.main = plos.color)

plos.names <- c("F1000Prime", "Wikipedia", "Research Blogging", "PLOS Comments",

"Facebook", "Twitter", "CiteULike", "Mendeley", "PubMed Citations", "CrossRef",

"Scopus", "PMC HTML Views", "PLOS HTML Views")

y <- barplot(colSums, horiz = TRUE, col = plos.colors, border = NA, xlab = plos.names,

xlim = c(0, 120), axes = FALSE, names.arg = plos.names, las = 1, adj = 0)

text(colSums + 6, y, labels = sprintf("%1.0f%%", colSums))

par(opar)

All PLOS Biology articles are viewed and downloaded, and almost all of them (all research articles and nearly all front matter) will be cited sooner or later. Almost all of them will also be bookmarked in online reference managers, such as Mendeley, but the percentage of articles that are discussed online is much smaller. Some of these percentages are time dependent; the use of social media discussion platforms, such as Twitter and Facebook for example, has increased in recent years (93% of PLOS Biology research articles published since June 2012 have been discussed on Twitter, and 63% mentioned on Facebook). These are the locations where most of the online discussion around published articles currently seems to take place; the percentage of papers with comments on the PLOS website or that have science blog posts written about them is much smaller. Not all of this online discussion is about research articles, and perhaps, not surprisingly, the most-tweeted PLOS article overall (with more than 1,100 tweets) is a PLOS Biology perspective on the use of social media for scientists (Bik & Goldstein, 2013).

Some metrics are not so much indicators of a broad online discussion, but rather focus on highlighting articles of particular interest. For example, science blogs allow a more detailed discussion of an article as compared to comments or tweets, and journals themselves sometimes choose to highlight a paper on their own blogs, allowing for a more digestible explanation of the science for the non-expert reader (Fausto et al., 2012). Coverage by other bloggers also serves the same purpose; a good example of this is one recent post on the OpenHelix Blog (“Video Tip of the Week: Turkeys and their genomes,” 2012) that contains video footage of the second author of a 2010 PLOS Biology article (Dalloul et al., 2010) discussing the turkey genome.

F1000Prime, a commercial service of recommendations by expert scientists, was added to the PLOS Article-Level Metrics in August 2013. We now highlight on the PLOS website when any articles have received at least one recommendation within F1000Prime. We also monitor when an article has been cited within the widely used modern-day online encyclopedia, Wikipedia. A good example of the latter is the Tasmanian devil Wikipedia page (“Tasmanian devil,” 2013) that links to a PLOS Biology research article published in 2010 (Nilsson et al., 2010). While a F1000Prime recommendation is a strong endorsement from peer(s) in the scientific community, being included in a Wikipedia page is akin to making it into a textbook about the subject area and being read by a much wider audience that goes beyond the scientific community.

PLOS Biology is the PLOS journal with the highest percentage of articles recommended in F1000Prime and mentioned in Wikipedia, but there is only partial overlap between the two groups of articles because they focus on different audiences (Figure 5). These recommendations and mentions in turn show correlations with other metrics, but not simple ones; you can’t assume, for example, that highly cited articles are more likely to be recommended by F1000Prime, so it will be interesting to monitor these trends now that we include this information.

# code for figure 5: Venn diagram F1000 vs. Wikipedia for PLOS Biology

# articles

# load required libraries

library("plyr")

library("VennDiagram")

# load May 20, 2013 ALM report

alm <- read.csv("../data/alm_report_plos_biology_2013-05-20.csv", stringsAsFactors = FALSE)

# only look at research articles

alm <- subset(alm, alm$article_type == "Research Article")

# group articles based on values in Wikipedia and F1000

reassignWikipedia <- function(x) if (x > 0) 1 else 0

alm$wikipedia_bin <- aaply(alm$wikipedia, 1, reassignWikipedia)

reassignF1000 <- function(x) if (x > 0) 2 else 0

alm$f1000_bin <- aaply(alm$f1000, 1, reassignF1000)

alm$article_group = alm$wikipedia_bin + alm$f1000_bin

reassignCombined <- function(x) if (x == 3) 1 else 0

alm$combined_bin <- aaply(alm$article_group, 1, reassignCombined)

reassignNo <- function(x) if (x == 0) 1 else 0

alm$no_bin <- aaply(alm$article_group, 1, reassignNo)

# remember to divide f1000_bin by 2, as this is the default value

summary <- colSums(subset(alm, select = c("wikipedia_bin", "f1000_bin", "combined_bin",

"no_bin")), na.rm = TRUE)

rows <- nrow(alm)

# options

plos.colors <- c("#c9c9c7", "#0000ff", "#ff0000")

# plot the chart

opar <- par(mai = c(0.5, 0.75, 3.5, 0.5), omi = c(0.5, 0.5, 1.5, 0.5), mgp = c(3,

0.5, 0.5), fg = "black", cex.main = 2, cex.lab = 1.5, col = plos.color,

col.main = plos.color, col.lab = plos.color, xaxs = "i", yaxs = "i")

venn.plot <- draw.triple.venn(area1 = rows, area2 = summary[1], area3 = summary[2]/2,

n12 = summary[1], n23 = summary[3], n13 = summary[2]/2, n123 = summary[3],

euler.d = TRUE, scaled = TRUE, fill = plos.colors, cex = 2, fontfamily = rep("sans",

7))

par(opar)

With the increasing availability of ALM data, there comes a growing need to provide tools that will allow the community to interrogate them. A good first step for researchers, research administrators, and others interested in looking at the metrics of a larger set of PLOS articles is the recently launched ALM Reports tool (“ALM Reports,” 2013). There are also a growing number of service providers, including Altmetric.com (“Altmetric.com,” 2013), ImpactStory (“ImpactStory,” 2013), and Plum Analytics (“Plum Analytics,” 2013) that provide similar services for articles from other publishers.

As article-level metrics become increasingly used by publishers, funders, universities, and researchers, one of the major challenges to overcome is ensuring that standards and best practices are widely adopted and understood. The National Information Standards Organization (NISO) was recently awarded a grant by the Alfred P. Sloan Foundation to work on this (“NISO Alternative Assessment Metrics (Altmetrics) Project,” 2013), and PLOS is actively involved in this project. We look forward to further developing our article-level metrics and to having them adopted by other publishers, which hopefully will pave the way to their wide incorporation into research and researcher assessments.

Supporting Information

Data S1. Dataset of ALM for PLOS Biology articles used in the text, and R scripts that were used to produce figures. The data were collected on May 20, 2013 and include all PLOS Biology articles published up to that day. Data for F1000Prime were collected on August 15, 2013. All charts were produced with R version 3.0.0.

Acknowledgments

This is a preprint authored by me and published in PLOS Biology in a peer-reviewed version.

References

- ALM Reports. (2013). Retrieved from http://almreports.plos.org

- Altmetric.com. (2013). Retrieved from http://www.altmetric.com/

- Bik, H. M., & Goldstein, M. C. (2013). An introduction to social media for scientists. PLOS Biology, 11(4), e1001535. doi:10.1371/journal.pbio.1001535

- Bollen, J., Sompel, H. de, Hagberg, A., & Chute, R. (2009). A Principal Component Analysis of 39 Scientific Impact Measures. PLoS ONE, 4(6), e6022. doi:10.1371/journal.pone.0006022

- Campbell, P. (2008). Escape from the impact factor. Ethics in Science and Environmental Politics, 8, 5–7. Journal article. doi:10.3354/esep00078

- Dalloul, R. A., Long, J. A., Zimin, A. V., Aslam, L., Beal, K., Blomberg, L. A., … Reed, K. M. (2010). Multi-platform next-generation sequencing of the domestic turkey (Meleagris gallopavo): genome assembly and analysis. PLOS Biology, 8(9). doi:10.1371/journal.pbio.1000475

- Dickson, S. P., Wang, K., Krantz, I., Hakonarson, H., & Goldstein, D. B. (2010). Rare variants create synthetic genome-wide associations. PLOS Biology, 8(1), e1000294. doi:10.1371/journal.pbio.1000294

- Eck, N. J. van, Waltman, L., Raan, A. F. J. van, Klautz, R. J. M., & Peul, W. C. (2013). Citation analysis may severely underestimate the impact of clinical research as compared to basic research. PLOS ONE, 8(4), e62395. doi:10.1371/journal.pone.0062395

- Eysenbach, G. (2006). Citation advantage of open access articles. PLOS Biology, 4(5), e157. doi:10.1371/journal.pbio.0040157

- Fausto, S., Machado, F. A., Bento, L. F. J., Iamarino, A., Nahas, T. R., & Munger, D. S. (2012). Research blogging: indexing and registering the change in science 2.0. PLOS ONE, 7(12), e50109. doi:10.1371/journal.pone.0050109

- Floreano, D., & Keller, L. (2010). Evolution of adaptive behaviour in robots by means of Darwinian selection. PLOS Biology, 8(1), e1000292. doi:10.1371/journal.pbio.1000292

- Glänzel, W., & Wouters, P. (2013). The dos and don’ts in individudal level bibliometrics. Retrieved from http://de.slideshare.net/paulwouters1/issi2013-wg-pw

- ImpactStory. (2013). Retrieved from http://impactstory.org/

- Lin, J., & Fenner, M. (2013). Altmetrics in Evolution: Defining and Redefining the Ontology of Article-Level Metrics. Information Standards Quarterly, 25(2), 20. doi:10.3789/isqv25no2.2013.04

- Narendra, D. P., Jin, S. M., Tanaka, A., Suen, D.-F., Gautier, C. A., Shen, J., … Youle, R. J. (2010). PINK1 is selectively stabilized on impaired mitochondria to activate Parkin. PLOS Biology, 8(1), e1000298. doi:10.1371/journal.pbio.1000298

- Nilsson, M. A., Churakov, G., Sommer, M., Tran, N. V., Zemann, A., Brosius, J., & Schmitz, J. (2010). Tracking marsupial evolution using archaic genomic retroposon insertions. PLOS Biology, 8(7), e1000436. doi:10.1371/journal.pbio.1000436

- NISO Alternative Assessment Metrics (Altmetrics) Project. (2013). Retrieved from http://www.niso.org/topics/tl/altmetrics/initiative

- Plum Analytics. (2013). Retrieved from http://www.plumanalytics.com/

- Schekman, R., & Patterson, M. (2013). Reforming research assessment. eLife, 2, e00855. doi:10.7554/eLife.00855

- Tasmanian devil. (2013). Retrieved from http://en.wikipedia.org/wiki/Tasmanian\devil

- Video Tip of the Week: Turkeys and their genomes. (2012). Retrieved from http://blog.openhelix.eu/?p=14388