Altmetrics – tools to assess the impact of scholarly works based on alternative online measures such as bookmarks, links, blog posts, etc. – have become a regular topic on this blog. The altmetrics manifesto was published in October 2010, and in the last 18 months we have seen a number of interesting new altmetrics services, including the ScienceCard service that I started six months ago. ScienceCard has been a very interesting learning experience, because I not only had to write the software, but also had to think about my perspective on altmetrics. Some of my recent thoughts are listed below.

FriendFeed still is a great model for a scholarly service

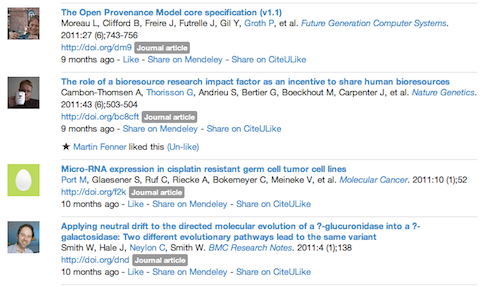

I stopped using FriendFeed a few months ago in favor of Twitter, but I still very much like the design of the service. I think that the concept should also work very well for altmetrics, and I have therefore continued work on an activity stream for ScienceCard. At http://sciencecard.org/works you will find a listing of all recent scholarly works of your ScienceCard friends, and you can now like, comment on and share them. ScienceCard friends are the people you follow on Twitter who also have a ScienceCard account, and sharing is possible via Mendeley and CiteULike. Comments are still in the testing stage, and Twitter integration is also in the works. I also want to add more scholarly content, including Slideshare presentations and blog posts, although the latter are really hard to collect in an automated way.

Altmetrics tools should not only present scholarly works and their metrics, but they should also allow users to interact with them via sharing, commenting, etc.

Altmetrics is about search

Altmetrics is really about two related concepts: reputation and discovery. ScienceCard tries to summarize the metrics available about a particular researcher, although much more work needs to be done to show that these numbers correlate with reputation. The discovery aspect of altmetrics is at least as important, and this means that these alternative metrics should help provide better search results. Some bibliographic databases let you sort your search results by number of citations – the problem is of course that citations can have a delay of several years. Download counts, social bookmarks, Twitter links, etc. on the other hand can give information about the impact of a paper within days of publication. The search results can be improved further by personalizing them based on what the friends in your social network are publishing, bookmarking or discussing.
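To make the discovery idea a bit more concrete, here is a minimal sketch of how search results could be ranked by a mix of citations and faster-moving metrics, with a boost for works your friends have engaged with. The `Work` class, the weights and the `FRIEND_BOOST` value are all assumptions made up for illustration; this is not how ScienceCard or any existing service ranks results.

```python
from dataclasses import dataclass, field


@dataclass
class Work:
    title: str
    citations: int = 0
    downloads: int = 0
    bookmarks: int = 0
    tweets: int = 0
    # People who published, bookmarked or discussed this work.
    engaged_users: set = field(default_factory=set)


# Hypothetical weights, chosen only for illustration.
WEIGHTS = {"citations": 5.0, "bookmarks": 2.0, "tweets": 1.0, "downloads": 0.1}
FRIEND_BOOST = 20.0


def score(work, friends):
    """Weighted sum of metrics plus a boost per friend who engaged with the work."""
    base = sum(weight * getattr(work, name) for name, weight in WEIGHTS.items())
    friend_hits = len(work.engaged_users & friends)
    return base + FRIEND_BOOST * friend_hits


def rank(works, friends=frozenset()):
    """Return works sorted from highest to lowest score."""
    return sorted(works, key=lambda w: score(w, friends), reverse=True)


old = Work("Older paper", citations=8, downloads=100)
new = Work("New paper", downloads=150, bookmarks=3, tweets=12,
           engaged_users={"alice"})

print([w.title for w in rank([old, new])])
print([w.title for w in rank([old, new], friends={"alice"})])
```

Without a social network the older, already cited paper ranks first; once "alice" is one of your friends, the new paper moves to the top even though it has no citations yet.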

Altmetrics is expensive

It is great to see so many altmetrics grassroots projects. Unfortunately, collecting altmetrics is resource-intensive and therefore costly, particularly for near real-time numbers such as Twitter citations. I’m afraid that many people (including myself) will have a hard time providing this service in a sustainable way. There are two possible solutions: providing altmetrics as a commercial service, and providing altmetrics as a collaborative effort. Although I understand the reasoning behind commercial services, I would very much prefer the open and collaborative approach. Collaboration could mean that several people and/or organizations join forces and run an altmetrics service together, but it could also mean that we break altmetrics into smaller services connected via programming interfaces. A typical altmetrics service has at least these functions:

- an interface to add journal articles and other scholarly objects, whether it is manual input by users or via an API

- a searchable database with metadata about scholarly objects

- a background service that collects metrics from other sources

- a public interface that displays metrics for particular scholarly objects and collections

- an interface for visualization and analysis of aggregate numbers

I don’t think that all five functions (and possibly more) necessarily have to be provided by the same service. I’m sure that there are enough people who are only interested in providing interesting ways to add scholarly objects, or in visualization and analysis. The background service is probably the most boring and resource-intensive part.
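As a rough illustration of splitting the functions listed above into smaller pieces that only talk to each other through narrow interfaces, consider the following sketch. All class and function names are hypothetical, the identifier is a made-up DOI, and the two metrics sources are stand-in lambdas rather than calls to real APIs.

```python
from typing import Callable, Dict

# A metrics source is any callable that maps an identifier (for example a DOI)
# to a count. Real sources would call external APIs; these are stand-ins.
MetricsSource = Callable[[str], int]


class Registry:
    """Functions 1 and 2: accept scholarly objects and make them searchable."""

    def __init__(self) -> None:
        self._works: Dict[str, str] = {}

    def add(self, identifier: str, title: str) -> None:
        self._works[identifier] = title

    def search(self, term: str) -> Dict[str, str]:
        return {i: t for i, t in self._works.items() if term.lower() in t.lower()}


class Collector:
    """Function 3: the background service that gathers metrics per source."""

    def __init__(self, sources: Dict[str, MetricsSource]) -> None:
        self._sources = sources

    def collect(self, identifier: str) -> Dict[str, int]:
        return {name: source(identifier) for name, source in self._sources.items()}


def render(title: str, metrics: Dict[str, int]) -> str:
    """Function 4: a public display of the metrics for one object."""
    counts = ", ".join(f"{name}: {value}" for name, value in sorted(metrics.items()))
    return f"{title} ({counts})"


registry = Registry()
registry.add("10.1234/example", "An example paper")  # made-up identifier
collector = Collector({"tweets": lambda _id: 12, "bookmarks": lambda _id: 3})

for identifier, title in registry.search("example").items():
    print(render(title, collector.collect(identifier)))
```

Because `Registry`, `Collector` and `render` only share identifiers and plain dictionaries, each part could in principle be run by a different person or organization.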

I want second-order metrics

Second-order metrics are the metrics for the scholarly works citing a particular paper, or for the person bookmarking or tweeting a scholarly work. This approach would add valuable information and is obviously similar to the PageRank algorithm for web links. Unfortunately this approach also creates a lot of extra work, as it means collecting the metrics (or at least some metrics) for all citing works. This is another thing that makes altmetrics expensive.
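A minimal sketch of a second-order citation metric, assuming a small made-up citation graph: the score of a work is the sum of the citation counts of the works citing it. This is a single-step simplification and not the iterative PageRank algorithm itself.

```python
# Hypothetical citation graph: each key is cited by the works in its list.
cited_by = {
    "A": ["B", "C"],
    "B": ["C", "D", "E"],
    "C": [],
    "D": [],
    "E": ["D"],
}


def first_order(work):
    """Plain citation count of a work."""
    return len(cited_by.get(work, []))


def second_order(work):
    """Sum of the citation counts of the works citing `work`."""
    return sum(first_order(citing) for citing in cited_by.get(work, []))


for work in sorted(cited_by):
    print(work, first_order(work), second_order(work))
```

Work "B" has more citations than "A", but "A" is cited by the well-cited "B" and therefore gets the higher second-order score. The sketch also shows where the cost comes from: `second_order` needs the citation count of every citing work, not just of the work itself.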

Twitter is important

Many of the recent altmetrics discussions have really been about the role of Twitter in scholarly communication. A lot of people are excited about the potential of Twitter to help discover interesting scholarly works, and to do so within days of publication. It is still too early to know for sure whether highly tweeted papers will be cited more often later on. Better Twitter integration is high on my to-do list for ScienceCard.

Yet another bibliographic database

The core function of altmetrics is to build a database with metadata about scholarly objects, including a variety of metrics. There are of course a large number of bibliographic databases already out there, so maybe existing databases can also be extended to include metrics. Ideally the existing database should not be restricted to particular kinds of scholarly works (e.g. journal articles) or disciplines, should be free to use and should allow reuse of the data with an appropriate license. There are several candidates that fit this description, including BibSoup run by the Open Knowledge Foundation and possibly also the Zotero database allowing synchronization with the desktop reference manager. Both services focus on bibliographic collections uploaded by users, whereas my idea of an altmetrics database relies on disambiguated authors and scholarly works.

There are too many altmetrics

It is great that altmetrics increases the variety of available metrics, but too many different metrics can be confusing to users. Although it is interesting that the number of citations for the same work varies widely between PubMed, Web of Science, Scopus, Microsoft Academic Search and Google Scholar, the typical user is probably only interested in one citation count. The same is true for usage metrics and number of social bookmarks. I think it would be helpful to consolidate the metrics about a scholarly work to maybe five numbers, including views/downloads, bookmarks, citations, comments, and tweets. This doesn’t mean that the other information shouldn’t be collected, just that the numbers will be consolidated for display.
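Consolidation for display could look something like the sketch below. The raw counts are invented, and the combination rules are my assumptions: citation databases largely count the same citations, so the median is used, whereas counts from different services in the other categories are simply added up.

```python
from statistics import median

# Hypothetical raw counts for one work, keyed by (category, source).
raw = {
    ("citations", "PubMed"): 12,
    ("citations", "Web of Science"): 15,
    ("citations", "Scopus"): 18,
    ("citations", "Microsoft Academic Search"): 20,
    ("citations", "Google Scholar"): 31,
    ("bookmarks", "Mendeley"): 40,
    ("bookmarks", "CiteULike"): 7,
    ("tweets", "Twitter"): 22,
}

# The five numbers shown to the user.
CATEGORIES = ["views", "bookmarks", "citations", "comments", "tweets"]

# Assumed rule: median for overlapping citation counts, sum for everything else.
COMBINE = {"citations": median}


def consolidate(raw_counts):
    """Reduce per-source counts to one number per display category."""
    grouped = {category: [] for category in CATEGORIES}
    for (category, _source), count in raw_counts.items():
        grouped[category].append(count)
    return {
        category: COMBINE.get(category, sum)(counts) if counts else 0
        for category, counts in grouped.items()
    }


print(consolidate(raw))
```

The per-source numbers in `raw` stay available for anyone who wants the details; only the display is reduced to five values.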