Last week I attended the altmetrics12 workshop in Chicago. You can read all 11 abstracts here, and the conference had good Twitter coverage (using the hashtag #altmetrics12), at least until Twitter had a total blackout around 12 PM our time.

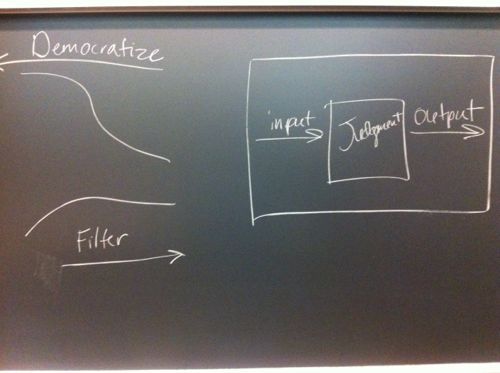

All but two presenters used slides – I have uploaded my presentation to Speaker Deck. Kelli Barr used the blackboard to explain that:

- filtering (via altmetrics or any other means) by definition always selects out content and therefore runs against the democratization of science

- peer review is a black box, and altmetrics is also a black box, only much bigger

altmetrics12 was one of the best conferences that I have attended recently. The intensity of the discussions was palpable. My only regret is that there wasn’t more time for discussion, although many of us convened in the bar afterwards.

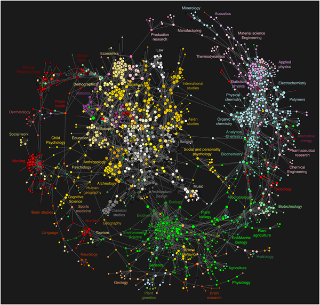

We were off to a very strong start with two excellent keynote presentations by Johann Bollen (Altmetrics: from usage data to social media) and Gregg Gordon (Alternative is the new Grey). Johann emphasized why it is both important and fascinating to study how science actually works. He stressed that science is a gift economy, where the currency is acknowledgement of influence in the form of citations. He sees two major problems with the present citation-based analysis of scientific impact: a) the data and b) the metrics. Citation data are very domain specific (citation practices differ widely between disciplines) and delayed (citations appear several years after publication of the research they cite). The problem with current citation-based metrics is that they ignore the network effect in science. Johann then went on to explain the MESUR project, which studies the patterns of scientific activity on a very large scale (1 billion usage events, 500 million citations, 50 million papers).

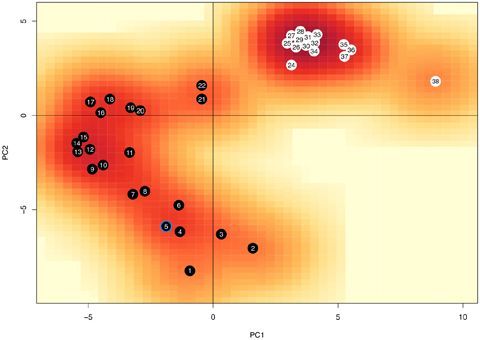

Johann then briefly explained the results of another 2009 PLoS ONE paper where he analyzed 39 metrics with principal component analysis. He found two major components in the metrics analysis: counting vs. social influence and fast vs. slow.
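For readers who have not used principal component analysis before, here is a minimal sketch of the general idea in Python. It is not the analysis from the paper: the data are synthetic, the four "metrics" and the `pca` helper are made up for illustration, and the two hidden factors are simply an assumption that mimics the finding that many metrics collapse onto two components.

```python
import numpy as np


def pca(scores, n_components=2):
    """PCA for a (papers x metrics) matrix via SVD.

    Returns the principal axes (one row per component) and the
    fraction of total variance each component explains.
    """
    # Standardize each metric so differences in scale do not dominate.
    z = (scores - scores.mean(axis=0)) / scores.std(axis=0)
    # Rows of vt are the principal axes; s holds the singular values.
    _, s, vt = np.linalg.svd(z, full_matrices=False)
    variance = s ** 2
    ratio = variance / variance.sum()
    return vt[:n_components], ratio[:n_components]


# Synthetic toy data (an assumption, not real metrics): four metrics
# that are noisy copies of two hidden factors.
rng = np.random.default_rng(0)
n_papers = 200
factor_a = rng.normal(size=n_papers)
factor_b = rng.normal(size=n_papers)


def noisy(factor):
    return factor + 0.1 * rng.normal(size=n_papers)


scores = np.column_stack(
    [noisy(factor_a), noisy(factor_a), noisy(factor_b), noisy(factor_b)]
)

components, ratio = pca(scores)
print(components.shape)  # one row per component, one column per metric
print(ratio.sum())       # share of variance captured by two components
```

With data built this way, two components capture almost all of the variance, which is the kind of pattern that lets many correlated metrics be summarized along a small number of axes.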

Johann then told the interesting story behind a 2010 paper where he showed that Twitter mood can predict the stock market. The paper was rejected by all publishers and was finally published on arXiv in October 2010. It immediately became very popular in terms of downloads and media attention (and was eventually published in the Journal of Computational Science in March 2011).

Gregg Gordon has summarized his keynote in a May blogpost, so I will focus on a few highlights. Gregg runs the Social Science Research Network (SSRN), a leading resource for sharing social sciences research. He thinks that altmetrics can provide the compass to navigate the map of science described earlier. He told us some very interesting anecdotes of users trying to game SSRN by inflating their download counts (e.g. “downloads for donuts”), and mentioned a research paper analyzing gaming at SSRN. Download counts are apparently taken very seriously by SSRN authors and are also used for hiring decisions. SSRN has not only written software to protect against gaming, but also has a person constantly looking over these numbers (Gregg feels that computer algorithms alone are not enough).

The two keynotes were followed by 11 short (10-15 minute) presentations, including two presentations about the PLoS Article-Level Metrics project. Jennifer Lin spoke about anti-gaming mechanisms and I emphasized the importance of personalizing altmetrics to fully understand the “network of science”. All presentation abstracts are available online.

After the lunch break (and some interesting discussions) we continued with demos of various altmetrics applications and users, including Total Impact, Plum Analytics, altmetric.com, the PLoS Article-Level Metrics application, Knode, Academia.edu, BioMed Central and Ubiquity Press (example here).

In the last hour and a half we split up into several smaller groups to discuss issues relevant to altmetrics. I was in the standards group and we all agreed that it is too early to set up rigid standards for this evolving field, but not too early to start the discussion. The two standards experts in our group (Todd Carpenter from NISO and David Baker from CASRAI) were of course very helpful in that discussion. In the closing group discussion the breakout groups reported their major discussion points (which will hopefully be written up by someone). We also learned that the NIH is considering changing the Biosketch format for CVs and is looking for input via a Request for Information (RFI) until June 29.